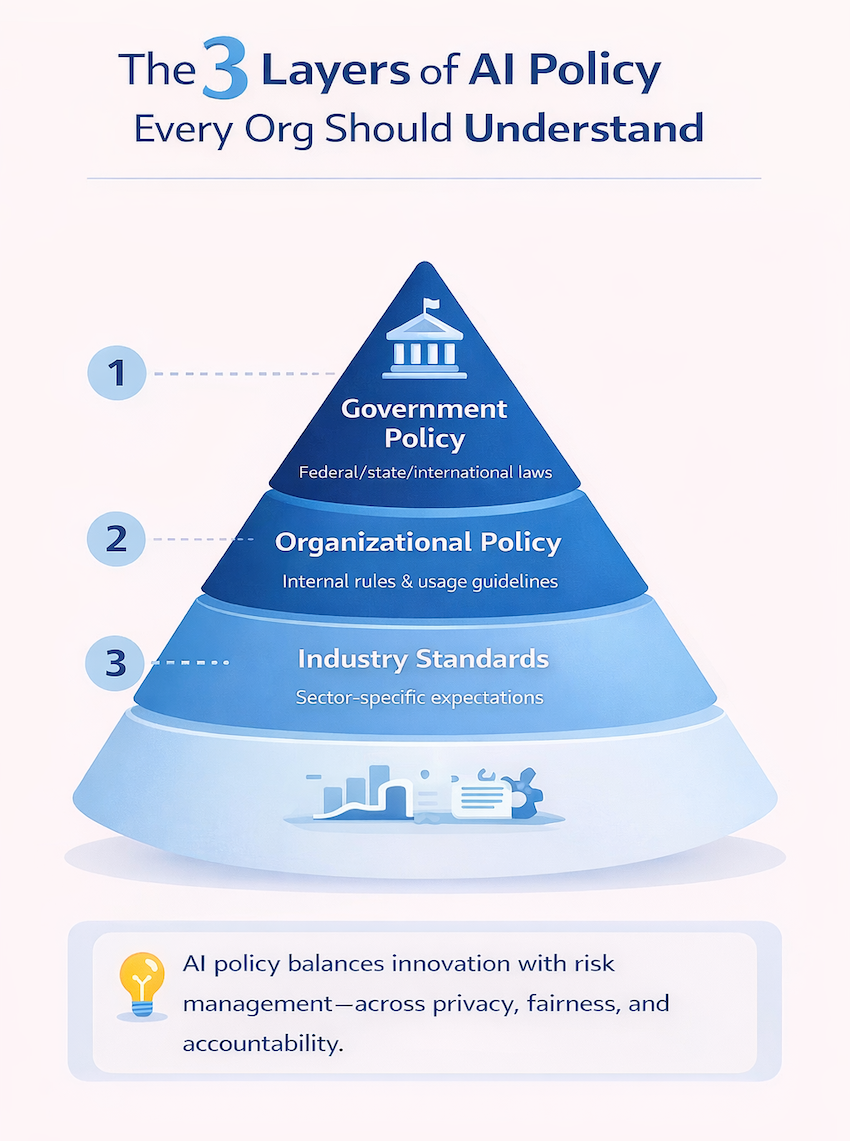

AI policy encompasses the guidelines, regulations, and frameworks that govern how organizations develop, deploy, and use artificial intelligence technologies. These policies exist at every level—from federal regulations and industry standards down to the internal rules your business creates for employees using AI tools.

The regulatory landscape is shifting fast, and organizations without clear AI governance face mounting compliance risks. This guide covers the core standards shaping AI policy today, current regulatory developments, and practical steps for building an AI policy that protects your organization.

AI policy refers to guidelines, regulations, and strategies that govern how artificial intelligence gets developed, deployed, and used. These policies balance innovation with risk mitigation across areas like data privacy, bias prevention, transparency, and accountability. Whether you’re looking at government regulations or internal company rules, AI policy creates the framework for responsible AI use.

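You’ll encounter AI policy at three distinct levels: government regulations, industry standards, and the internal rules organizations create for their own AI use.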

The timing matters here. AI capabilities have expanded faster than regulations can keep up, and organizations that wait for perfect clarity often find themselves scrambling when new requirements take effect. Getting ahead of AI policy now prevents compliance headaches later.

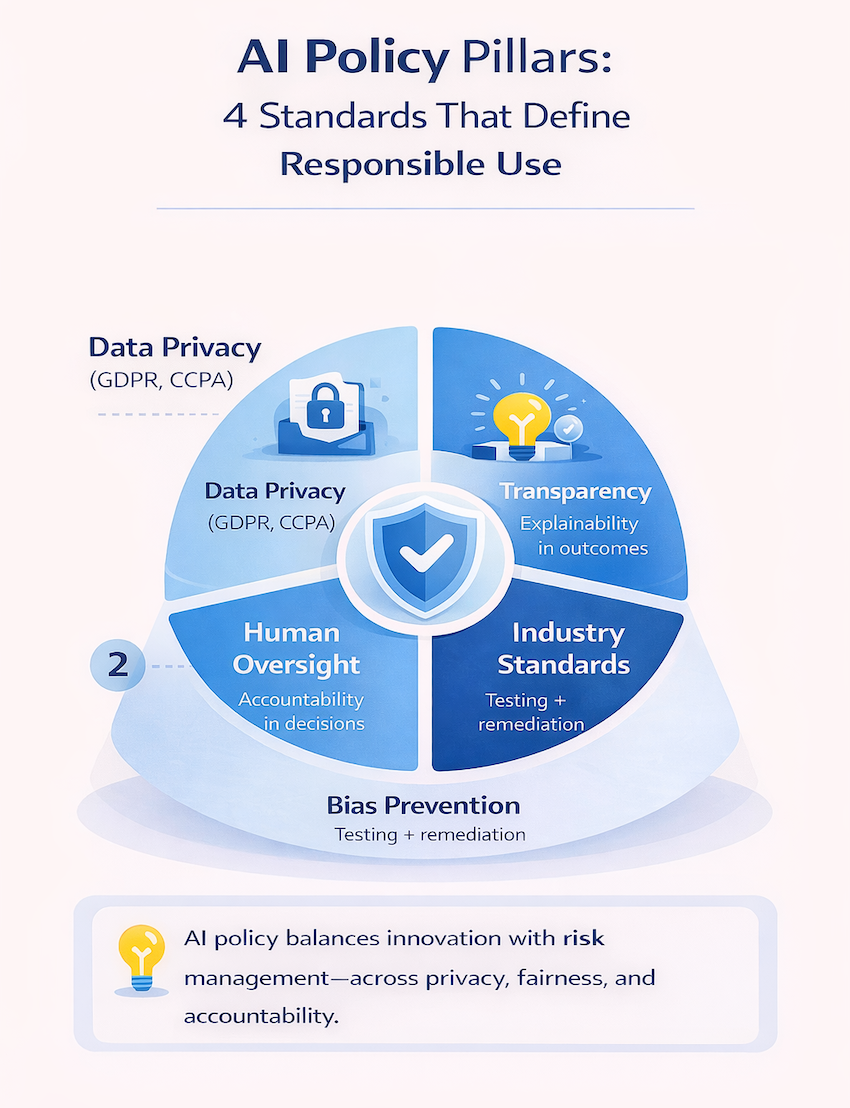

Most AI policies—whether from government regulators or corporate legal teams—address the same foundational principles. Once you understand these building blocks, evaluating any AI policy framework becomes much easier.

AI systems process large volumes of data, which triggers privacy obligations under frameworks like GDPR in Europe and CCPA in California. Even if your organization isn’t directly subject to these regulations, the vendors you use likely are.

The core requirement is straightforward: organizations collecting or processing personal data through AI need clear legal bases for that processing, appropriate security measures, and defined retention limits.
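Here is a minimal Python sketch of a retention check that shows what "defined retention limits" can look like in practice. The category names, the day counts in `RETENTION_DAYS`, and the `flag_for_deletion_review` helper are hypothetical. They are not values taken from GDPR or CCPA. Real limits come from your legal basis for processing and your documented retention schedule.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention limits in days, per data category (illustrative values only).
RETENTION_DAYS = {
    "chat_transcript": 90,
    "model_feedback": 365,
}


@dataclass
class PersonalDataRecord:
    record_id: str
    category: str
    collected_on: date


def flag_for_deletion_review(records, retention_days, today):
    """Return IDs of records held past their limit, or with no limit defined."""
    flagged = []
    for rec in records:
        limit = retention_days.get(rec.category)
        if limit is None:
            # A category without a documented limit is itself a policy gap.
            flagged.append(rec.record_id)
        elif today - rec.collected_on > timedelta(days=limit):
            flagged.append(rec.record_id)
    return flagged


records = [
    PersonalDataRecord("r1", "chat_transcript", date(2024, 1, 1)),
    PersonalDataRecord("r2", "chat_transcript", date(2024, 5, 1)),
    PersonalDataRecord("r3", "uncategorized", date(2024, 5, 1)),
]
print(flag_for_deletion_review(records, RETENTION_DAYS, today=date(2024, 6, 1)))
# ['r1', 'r3']
```

The check flags records with no defined limit as well as expired ones, because a missing retention rule is usually the harder problem to spot.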

Explainability means the ability to understand how an AI system reaches its decisions. Regulators and customers increasingly demand transparency in AI-driven outcomes, particularly when those outcomes affect access to credit, employment, healthcare, or housing.

This doesn’t mean every AI decision requires a detailed technical breakdown. However, organizations are generally expected to provide meaningful information about which factors influenced a decision and how individuals can request a review.
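With a simple linear scoring model, you can show "what factors influenced a decision" by ranking each factor's contribution, meaning its weight times its input value. The sketch below uses a hypothetical linear model with made-up weights. More complex models typically need dedicated explanation methods, so treat this as an illustration of the idea and not as a complete explainability approach.

```python
# Hypothetical weights for a linear scoring model (illustrative, not a real model).
WEIGHTS = {
    "payment_history": 2.0,
    "debt_to_income": -3.0,
    "account_age_years": 0.5,
}


def top_factors(applicant, weights, n=2):
    """Return the n factors with the largest absolute contribution to the score."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:n]


applicant = {"payment_history": 0.5, "debt_to_income": 0.5, "account_age_years": 4.0}
print(top_factors(applicant, WEIGHTS))
# [('account_age_years', 2.0), ('debt_to_income', -1.5)]
```

A negative contribution lowered the score. Pair output like this with clear instructions for requesting a human review to cover both parts of the expectation.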

High-stakes AI decisions generally require human review—a concept called “human-in-the-loop.” A qualified person reviews AI recommendations before final decisions get made, particularly in areas like hiring, lending, or medical diagnosis.

The goal isn’t slowing down AI systems. Instead, human oversight ensures someone with appropriate authority can intervene when AI outputs seem incorrect or unfair.
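You can express a human-in-the-loop rule as a routing step that sits in front of any AI recommendation. In this minimal sketch, the `HIGH_STAKES` categories, the `confidence_floor` threshold, and the plain list used as a review queue are all assumptions made for illustration. The low-confidence check is an extra safeguard and not part of the principle itself.

```python
from dataclasses import dataclass

# Hypothetical decision types this policy treats as high-stakes.
HIGH_STAKES = {"hiring", "lending", "medical_diagnosis"}


@dataclass
class AIRecommendation:
    decision_type: str
    subject_id: str
    recommendation: str
    confidence: float


def route(rec, review_queue, confidence_floor=0.9):
    """Hold high-stakes or low-confidence recommendations for a human reviewer."""
    if rec.decision_type in HIGH_STAKES or rec.confidence < confidence_floor:
        review_queue.append(rec)  # A qualified person decides; the AI only recommends.
        return "pending_human_review"
    return "applied_automatically"


queue = []
print(route(AIRecommendation("hiring", "cand-17", "advance", 0.98), queue))
print(route(AIRecommendation("spam_filter", "msg-42", "quarantine", 0.97), queue))
print(len(queue))
```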

Algorithmic bias occurs when AI systems produce systematically unfair outcomes for certain groups. Policies increasingly require organizations to test AI systems for discriminatory results before deployment and monitor them continuously afterward.

Testing alone isn’t sufficient. Organizations should also document how they address bias when they discover it, including the steps taken to fix each issue.
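One widely used screening check for US hiring outcomes is the "four-fifths" comparison of selection rates. Any group whose selection rate falls below 80% of the highest group's rate is flagged for closer review. The sketch below is illustrative only. The group names and counts are made up, and the 0.8 threshold is a rule of thumb, not a legal determination. A real bias assessment would add further statistical tests and legal input.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, applicants); assumes applicants > 0."""
    return {group: selected / applicants for group, (selected, applicants) in outcomes.items()}


def impact_ratios(outcomes):
    """Each group's rate relative to the highest rate; assumes some group was selected."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return {group: rate / highest for group, rate in rates.items()}


def flagged_groups(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below the threshold (four-fifths by default)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical screening results from an AI resume filter.
outcomes = {"group_a": (48, 80), "group_b": (24, 60)}
print(selection_rates(outcomes))  # group_a 0.6, group_b 0.4
print(flagged_groups(outcomes))   # ['group_b']
```

AI systems can drift after deployment. You can rerun the same check on each period's production decisions to support ongoing monitoring.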

The AI regulatory landscape evolves quickly. While specific rules change frequently, understanding the direction of regulatory momentum helps organizations prepare for what’s coming.

Federal agencies have taken significant action on AI governance. NIST released the AI Risk Management Framework, providing voluntary guidance that many organizations treat as a de facto standard. The FTC has signaled aggressive enforcement priorities around deceptive AI practices and algorithmic discrimination.

CISA has published AI security guidance focused on critical infrastructure, while sector-specific regulators develop AI requirements for their industries.

States often move faster than the federal government on AI regulation. Several states have enacted or proposed laws addressing AI in hiring, insurance underwriting, and consumer protection.

This patchwork creates compliance complexity for organizations operating across multiple states. Monitoring state-level developments has become essential for managing risk effectively.

The EU AI Act represents the most comprehensive AI regulatory framework globally, and its influence extends to US businesses serving European customers or using European data. Other jurisdictions are developing their own approaches, creating a complex international landscape.

Organizations with any international presence—even through cloud services or vendor relationships—benefit from tracking global regulatory trends.

The relationship between artificial intelligence and government involves multiple agencies with overlapping responsibilities. Understanding this structure helps you identify which requirements apply to your organization.

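Several federal agencies play distinct roles in AI governance:

- NIST develops voluntary guidance, including the AI Risk Management Framework.
- The FTC targets deceptive AI practices and algorithmic discrimination through enforcement.
- CISA publishes AI security guidance focused on critical infrastructure.
- Sector-specific regulators develop AI requirements for their own industries.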

No single agency has comprehensive AI authority, which means organizations often face requirements from multiple sources simultaneously.

Congress continues developing AI legislation addressing liability, transparency, and sector-specific rules. While predicting specific outcomes remains difficult, the themes under consideration—accountability, disclosure requirements, and risk-based approaches—signal likely future requirements.

Organizations following legislative developments can begin preparing for probable requirements before they become mandatory.

Executive orders set federal priorities and direct agency action on AI. While they don’t create laws directly, they shape the regulatory environment and signal administration priorities.

Current federal strategy emphasizes maintaining US AI leadership while managing risks. This dual focus means policies generally aim to enable innovation rather than restrict it—but with increasing attention to safety and security requirements.

AI.gov serves as the central resource for federal AI initiatives and policy updates.

Security-focused directives require robust security measures from organizations in critical infrastructure sectors and from those contracting with the federal government. These requirements address AI system security, supply chain risks, and incident reporting obligations.

Federal AI policy also addresses workforce development and economic competitiveness. While these aspects may seem less immediately relevant to compliance, they influence funding priorities and may create opportunities for organizations investing in AI capabilities.

Moving from understanding external requirements to implementing internal policies requires a structured approach. The following steps provide a framework that organizations can adapt to their specific circumstances.

Start with an inventory of AI tools and systems already in use. Many organizations discover AI embedded in software they didn’t realize contained AI capabilities—from email filtering to customer service chatbots to analytics platforms.

You need to understand your current AI footprint before you can govern it.

Establish what AI applications are permitted, restricted, or prohibited based on your risk tolerance and business operations. Not all AI uses carry equal risk—a spell-checker presents different concerns than an automated hiring tool.

Risk-based categorization helps focus governance resources where they matter most.
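An inventory becomes actionable once you map each entry to a policy status. The sketch below pairs a hypothetical inventory record with a permitted, restricted, or prohibited lookup. The use-case names, vendor names, and status assignments are placeholders, and each organization would set its own.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PERMITTED = "permitted"
    RESTRICTED = "restricted"  # allowed only with approval and extra controls
    PROHIBITED = "prohibited"


# Hypothetical policy decisions per use case; each organization sets its own.
POLICY = {
    "spell_check": Status.PERMITTED,
    "hiring_screening": Status.RESTRICTED,
    "customer_data_in_public_chatbot": Status.PROHIBITED,
}


@dataclass
class InventoryItem:
    tool: str
    vendor: str
    use_case: str


def classify(inventory, policy):
    """Group tools by status; unreviewed use cases default to RESTRICTED."""
    grouped = {status: [] for status in Status}
    for item in inventory:
        grouped[policy.get(item.use_case, Status.RESTRICTED)].append(item.tool)
    return grouped


inventory = [
    InventoryItem("Editor spell check", "Vendor A", "spell_check"),
    InventoryItem("Resume screener", "Vendor B", "hiring_screening"),
    InventoryItem("Analytics add-on", "Vendor C", "unreviewed"),
]
for status, tools in classify(inventory, POLICY).items():
    print(status.value, tools)
```

Unknown use cases default to RESTRICTED, so a newly discovered AI feature must be reviewed before anyone treats it as permitted.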

Assign clear roles for AI policy oversight. Depending on your organization’s size and structure, ownership might rest with IT, legal, compliance, or a cross-functional team.

What matters most is clarity about who makes decisions and who monitors compliance.

Policies require technical infrastructure for enforcement. This includes access controls, logging, monitoring, and security measures appropriate to the AI systems in use.

Organizations without dedicated IT security resources often find that managed IT partners can implement and maintain these controls more effectively than building capabilities internally.
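The sketch below pairs access controls with logging. It checks a hypothetical role-based allowlist before granting access to an AI tool, then writes an audit entry for every request using Python's standard `logging` module. The role names, tool names, and the `request_ai_access` function are assumptions. In practice these controls often live in an identity provider or network proxy and not in application code.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(name)s %(message)s")
audit_log = logging.getLogger("ai_usage_audit")

# Hypothetical allowlist: which roles may use which approved AI tools.
ALLOWED_TOOLS = {
    "analyst": {"approved_chat_assistant"},
    "recruiter": {"approved_chat_assistant", "resume_screener"},
}


def request_ai_access(user, role, tool):
    """Allow only tools approved for the user's role, and log every decision."""
    allowed = tool in ALLOWED_TOOLS.get(role, set())
    audit_log.info("user=%s role=%s tool=%s decision=%s",
                   user, role, tool, "allow" if allowed else "deny")
    return allowed


request_ai_access("jdoe", "recruiter", "resume_screener")  # allowed, logged
request_ai_access("asmith", "analyst", "resume_screener")  # denied, logged
```

The code logs denied requests as well as allowed ones. That audit trail is what later demonstrates the policy was actually enforced.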

Policy effectiveness depends on user awareness. Employees using AI tools benefit from understanding what’s permitted, what’s prohibited, and how to report concerns.

Training frequency and documentation requirements vary by industry and risk level. Annual training with updates for significant policy changes represents a reasonable baseline.

AI policy isn’t a one-time exercise. Organizations benefit from reviewing policies when new regulations take effect, when deploying new AI systems, after security incidents, and at minimum annually.

Building review triggers into your governance process helps ensure policies remain current.
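You can encode these review triggers as a simple check that runs on a schedule or whenever a relevant event is recorded. This is a minimal sketch. The function name and its flags are hypothetical, and it approximates "annual" as 365 days.

```python
from datetime import date, timedelta


def review_reasons(last_review, today, *, new_regulation=False,
                   new_ai_system=False, security_incident=False):
    """Return the reasons an AI policy review is due (empty list if none)."""
    reasons = []
    if new_regulation:
        reasons.append("new regulation in effect")
    if new_ai_system:
        reasons.append("new AI system deployed")
    if security_incident:
        reasons.append("security incident")
    # Minimum cadence: at least once a year (approximated as 365 days).
    if today - last_review >= timedelta(days=365):
        reasons.append("annual review due")
    return reasons


print(review_reasons(date(2024, 1, 10), date(2024, 3, 1)))
print(review_reasons(date(2023, 1, 1), date(2024, 3, 1), security_incident=True))
```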

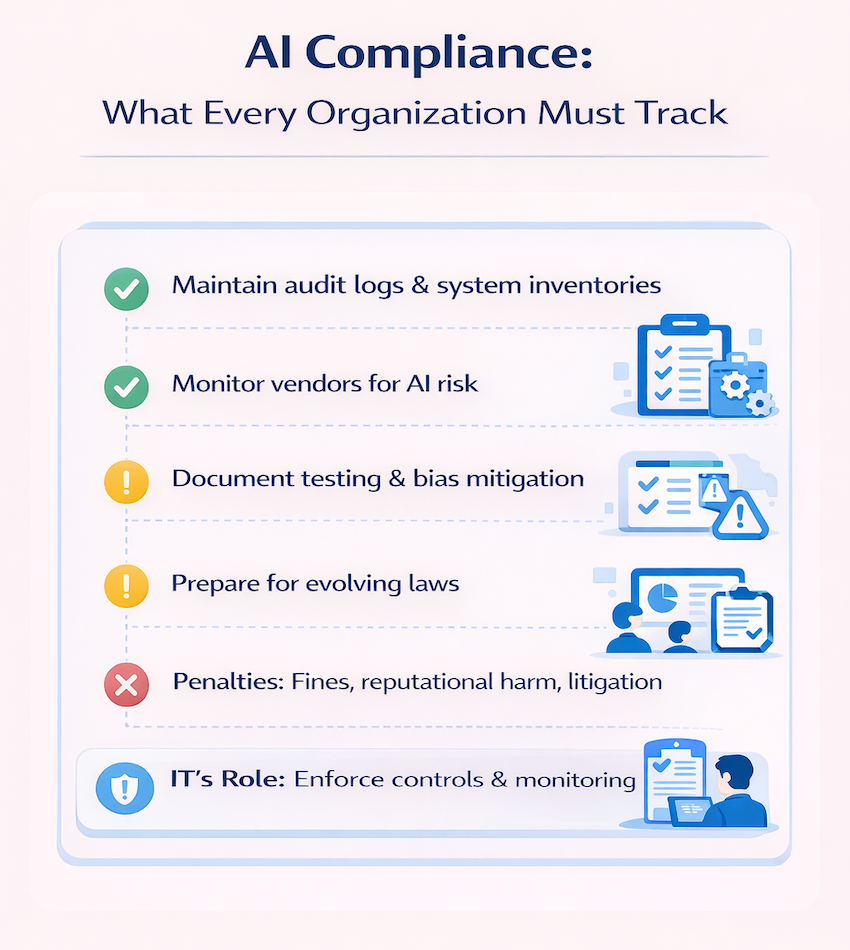

Creating a policy is only the beginning. Ongoing compliance management determines whether policies actually reduce risk.

Organizations demonstrate AI policy compliance through documentation, testing, and audits. This includes maintaining records of AI system inventories, risk assessments, testing results, and incident responses.

Audit requirements vary by industry, but building documentation practices early simplifies compliance as requirements evolve.

Enforcement consequences for AI violations include monetary fines, consent orders requiring operational changes, litigation exposure, and reputational harm. The specific penalties depend on which regulations apply and the nature of the violation.

Perhaps more significantly, AI-related incidents can damage customer trust in ways that take years to rebuild.

Many AI risks come from vendor tools rather than internally developed systems. Due diligence for AI-enabled software and services has become essential.

| Risk Category | Assessment Questions |
|---|---|
| Data handling | How does the vendor process and store data used by AI? |
| Transparency | Can the vendor explain how AI decisions are made? |
| Security | What security controls protect the AI system? |
| Compliance | Does the vendor certify compliance with relevant regulations? |
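The vendor assessment questions can also serve as a tracking checklist during due diligence. In this sketch, the questions come from the vendor assessment table. The `open_items` helper and the idea of recording a satisfactory or unsatisfactory judgment per category are assumptions about how a reviewer might track progress.

```python
# Questions from the vendor assessment table, keyed by risk category.
VENDOR_QUESTIONS = {
    "Data handling": "How does the vendor process and store data used by AI?",
    "Transparency": "Can the vendor explain how AI decisions are made?",
    "Security": "What security controls protect the AI system?",
    "Compliance": "Does the vendor certify compliance with relevant regulations?",
}


def open_items(answers):
    """Return categories (in table order) not yet marked satisfactory.

    answers maps category -> True if the reviewer found the response satisfactory.
    """
    return [category for category in VENDOR_QUESTIONS if not answers.get(category, False)]


review = {"Data handling": True, "Security": False}
for category in open_items(review):
    print(f"{category}: {VENDOR_QUESTIONS[category]}")
```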

AI policy requirements vary significantly by sector. Understanding your industry’s specific obligations helps prioritize compliance efforts.

Healthcare organizations face particular AI policy challenges because AI systems often process protected health information. HIPAA requirements apply to AI systems just as they do to other technologies handling patient data.

Clinical decision support systems raise additional considerations around liability and physician oversight that healthcare-specific AI policies address.

Financial regulators focus on AI in trading, lending, and customer service. Fair lending requirements apply to AI-driven credit decisions, and regulators expect institutions to explain how AI models reach conclusions affecting consumers.

Operational AI in manufacturing raises safety and quality control considerations. AI systems controlling physical processes require different governance approaches than purely informational AI applications.

Implementing effective AI policy requires technical expertise and ongoing support. The infrastructure, monitoring, and security controls that make AI governance work don’t maintain themselves.

Organizations often find that building these capabilities internally diverts resources from core business activities. Managed IT partners provide the specialized expertise for AI policy compliance while allowing internal teams to focus on strategic priorities.

Book a consultation to discuss how IT GOAT can support your AI policy implementation with the technical infrastructure and monitoring capabilities that effective governance requires.

AI policy refers to formal rules and regulations with enforcement mechanisms, while AI ethics describes broader moral principles that may inform policy but don’t constitute binding requirements. An organization can be ethically questionable while remaining legally compliant—and vice versa.

Any organization using AI-enabled tools benefits from establishing usage guidelines to manage risk. The policy can be simpler than enterprise-level frameworks, but documenting acceptable use and basic security expectations provides meaningful protection.

Review AI policies when new regulations take effect, when deploying new AI systems, after security incidents, and at minimum annually. Building review triggers into governance processes helps ensure policies remain current as technology and regulations evolve.

Using AI without policies creates significant risk. Organizations benefit from establishing at least baseline acceptable use guidelines before deploying AI-enabled tools, even if comprehensive policies take longer to develop.

IT teams implement the technical controls, monitoring, and security infrastructure required to enforce AI policies and demonstrate compliance to regulators. Without appropriate technical implementation, policies remain aspirational rather than operational.
